Author

Eugene Zeien, BS Applied Physics 1991 18 years experience in data analysis & IT support at the University of Iowa.

Abstract

Having heard, so frequently, that the data underlying the current consensus was robustly supportive, I decided to take the time to find raw, unadjusted data and undertake some simple analyses. I was quite surprised by the results. I am posting those here for comments and suggestions, along with source code and links to the raw data.

The majority of climate researchers use the adjusted data in their work, because CRU, GISS, and NCDC make the adjusted data easily accessible and easy to use. Since evidence has surfaced which suggests those three entities are not independent, all three adjustment methods may be suspect. Let’s take a look.

Methods

Starting with a home PC, I installed Sun’s VirtualBox. Next, since my experience is primarily Linux/Unix based, I installed Ubuntu 9.10 on a virtual 40Gb disk. GHCN maintains a nice, though hard to find, ftp repository of raw climate station data, which was downloaded and decompressed. The documentation in readme.txt was fairly easy to follow.

As a first pass, all the stations available in GHCN were included. Temperature data was combined into a bin from all the stations within a geographical 1×1 degree sector. This methodology allows for station relocation and overlap with minimal impact upon the results. An annual temperature was computed for a sector with more than 240 daily readings within that year. 240 was a completely arbitrary decision, based upon the reasoning that the stations with high dropout rates are in the most inhospitable regions.

#!/bin/bash

# Monthly data

#wget -Nr ftp://ftp.ncdc.noaa.gov/pub/data/ghcn/v2 -o monthly.log

#find ftp.ncdc.noaa.gov/pub/data/ghcn -name \*.Z -exec uncompress '{}' \;

# Daily data

wget -Nr --exclude-directories=grid ftp://ftp.ncdc.noaa.gov/pub/data/ghcn/daily -o daily.log

H=`pwd`

# Start with list of stations' coordinates & codes

# ghcnd-stations.txt: List of stations and their metadata (e.g., coordinates)

# Contents look like:

# ID Lat Long Elev State Name GSNflag HCNflag WMOid

# AJ000037749 40.7000 47.7000 95.0 GEOKCAY 37749

# Look up min & max temps from ${StationID}.dly files

# ex: "AJ000037749.dly" line 1 looks like:

# YearMo Day1 Day2 Day3 ...

# AJ000037749193602PRCP 0 I 17 I 0 I 0 I 0 I 0 I 0 I 78 I 0 I 0 I 0 I 0 I 0 I 20 I 0 I 0 I 0 I 0 I 0 I 0 I 0 I 12 I 0 I 0 I 0 I 0 I 0 I 0 I 0 I-9999 -9999

# AJ000037749200910TMAX 240 S 224 S 252 S 256 S 250 S 250 S 239 S 212 S 220 S 221 S 225 S 239 S-9999 253 S 256 S 231 S 239 S 240 S 230 S 231 S 229 S 230 S 231 S-9999 243 S-9999 181 S 187 S 212 S 170 S 173 S

# Build list of Lat Lon pairs to check for data

cd ftp.ncdc.noaa.gov/pub/data/ghcn/daily/

awk '{printf("%d %d\n",$2,$3)}' ghcnd-stations.txt | sort -u | grep . > ${H}/Coords.txt

# Going to populate a 180x360 degree grid

# anything from X.0 - X.99 goes into X's box

echo -n "" > ${H}/TAvg_1x1.txt

let Lines=`cat ${H}/Coords.txt | wc -l`

let C=0

while [ $C -lt $Lines ] ; do

let C++

Coords=(`awk 'NR=='$C'{print $0; exit}' ${H}/Coords.txt`)

Lat=${Coords[0]} ; Lon=${Coords[1]}

## select stations with this Lat & Lon

stations=(`awk -v lat=$Lat -v lon=$Lon '\

(lat+0.0<=$2)&&($2<lat+1.0)&&\

(lon+0.0<=$3)&&($3<lon+1.0){print $1}' ghcnd-stations.txt`)

if [ ${#stations[@]} -gt 0 ] ; then

printf '+ %s %s %s\n' $Lat $Lon ${#stations[@]}

else

printf ' %s %s\n' $Lat $Lon

fi

## Poll stations for years with temperature data. -9999 is missing value.

Tstations=()

for S in ${stations[@]} ; do

Tstations=(${Tstations[@]} `awk '$1~/TMAX/{print FILENAME; exit}' all/${S}.dly`)

done

if [ ${#Tstations[@]} -eq 0 ] ; then

let Lon++

continue

fi

printf 'T %s %s %s\n' $Lat $Lon ${#Tstations[@]}

## Would be best to pair up TMIN & TMAX

## Parsing is FUN!

## Output file should be Lat Lon Year TAvg (divided by 10.0 to remove builtin T*10)

## Added a minimum N>240 to avoid regions with one temperature obs/year 😉

awk '{St=substr($0,0,11);Yr=substr($0,12,4);Mo=substr($0,16,2);}\

$1~/TMAX/{l=length($0); d=1; \

for(i=22;i<l;i+=8){\

mx=substr($0,i,5)/10.0;\

if((mx<100)&&(mx>(-100))){\

tmx[Yr]+=mx; Nx[Yr]++; \

if(D[Yr,Mo,d]==""){\

D[Yr]++; Day[Yr,Mo,d]=1;\

}\

}\

d++}}\

$1~/TMIN/{l=length($0); d=1; \

for(i=22;i<l;i+=8){\

mn=substr($0,i,5)/10.0;\

if((mn<100)&&(mn>(-100))){tmn[Yr]+=mn; Nn[Yr]++;}\

d++}}\

END{for(i in tmx){\

if((tmn[i]!="")&&(D[i]>240)){ \

print "'$Lat'","'$Lon'",i,((tmx[i]/Nx[i])+(tmn[i]/Nn[i]))/2.0}\

}}' ${Tstations[@]} | sort -n >> ${H}/TAvg_1x1.txt

done

awk '{Temp[$3]+=$4;n[$3]++}\

END{for(i in Temp){print i,Temp[i]/n[i]}}' ${H}/TAvg_1x1.txt > ${H}/TAvg_Globe.txt

exit

In order to minimize the effect of temperature stations appearing and dropping out, sectors were selected which had continuous annual temperature data from 1900 to 2009. The data from the 613 sectors has a fairly strong sinusoidal pattern. The poorly correlated linear trend is probably related to the point in the wave’s peaks and troughs where the data begins and ends. Do not copy this graph, I have an idea…

Correlation 0.43 using a 30 year sine wave combined with a 0.3/century drop in temperature.

## This is a follow-up from the previous processing script.

## Sift out sector that are have measures for all of 1900-1999

awk '{Lat=$1; Lon=$2; Year=$3;\

Coords[Lat,Lon]=1; T[Lat,Lon,Year]=$4;\

}\

END{\

for(C in Coords){\

Bad=0;\

for(Y=1900; Y<=1999; Y++){\

if(T[C,Y]==""){Bad=1}\

}\

if(Bad==0){\

for(Y=1900; Y<=2009; Y++){Keep[C,Y]+=T[C,Y]; N[C,Y]++}\

}\

}\

print "Year Celsius";\

for(Y=1900; Y<=2009; Y++){\

for(C in Coords){\

if(N[C,Y]!=0){AnnualT[Y]+=(Keep[C,Y]/N[C,Y]); Nsec[Y]++}\

}\

print Y,AnnualT[Y]/Nsec[Y],Y,Nsec[Y];\

}\

}' ${H}/TAvg_1x1.txt > ${H}/Continuous_TAvg_Globe.txt

The reason 1900 was chosen is due to the increase in the number of stations just prior to the turn of the century. The next two graphs do not represent the 613 sectors used above. I will post source code.. tomorrow?

Intuitively, the monthly average temperature oscillates with the Northern seasons.

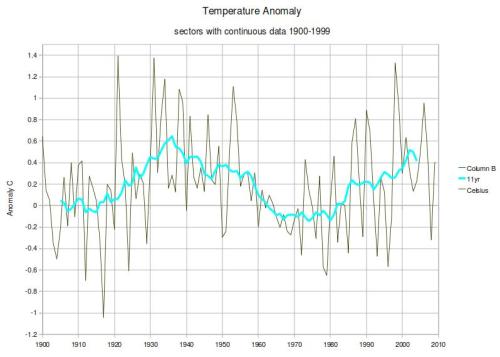

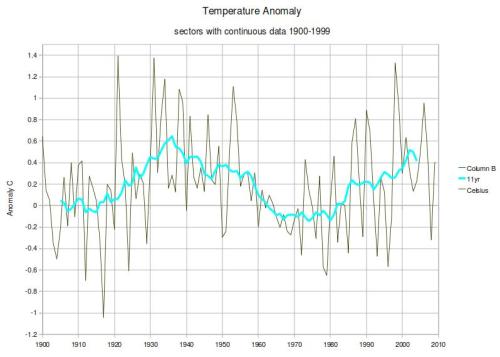

Having established a basic overview of what the raw data looks like, now it is time to look at the temperature anomalies. Starting with the 1×1 degree sector data, those with continuous temperatures 1900-1999 were used to demonstrate the effect of transforming and averaging raw temperatures across the globe versus averaging anomalies(base 1961-1990). This graph illustrates the wild fluctuations of land temperature anomalies from one year to the next and the 11 year moving average.

## Get sectors with complete 1900-1999 data, compute anomalies (base 1961-1990)

## 11 yr average is easily done in spreadsheet, so that's not here

awk '{Lat=$1; Lon=$2; Year=$3;\

Coords[Lat,Lon]=1; T[Lat,Lon,Year]=$4;\

}\

END{\

for(C in Coords){\

Bad=0;\

for(Y=1900; Y<=1999; Y++){\

if(T[C,Y]==""){Bad=1}\

}\

if(Bad==0){\

for(Y=1900; Y<=2009; Y++){Keep[C,Y]+=T[C,Y]; N[C,Y]++}\

for(Y=1961; Y<=1990; Y++){BaseSum[C]+=T[C,Y]; BaseN[C]++}\

Base[C]=BaseSum[C]/BaseN[C];\

}\

}\

print "Year Celsius";\

for(Y=1900; Y ${H}/Continuous_Anomaly_Globe.txt

How does an anomaly differ from real temperature minus a constant? Surprise! A constant was chosen that left the two plots offset by 0.1 C.

How does the temperature data go from the chaotic, variable state seen above to the relatively quiet plot posted by GISS:

Note the subtitle (Meteorological Stations). Clearly, this does not include ocean data. Other GISS graphs are available here.

How do the GISS computed anomalies compare with unadjusted anomalies? The 1961-1990 mean from the GISS anomalies was 0.09, whereas the 1961-1990 mean from my anomalies was 0.00. Clearly, the GISS data is adjusted further after the conversion to anomalies.

Clearly, something needs to be done to reduce the variance in the raw temperature data. Let’s take a look at temperature within 10 degree latitude bands, i.e. latitude 80 is 75 to 84.99. Using all sectors with 240 days of data within the year:

## First pass, average temp by latitude x10

awk '{if($1 < 0){Lat=int(($1-5)/10)}else{Lat=int(($1+5)/10)} \

Temp[Lat,$3]+=$4;n[Lat,$3]++; Years[$3]=1; Lats[Lat]=1;}\

END{printf("Year ");\

for(L=90; L>=-90; L-=10){printf("Lat=%d ",L);}\

printf("\n");\

for(Y=1900; Y<=2009; Y++){\

printf("%d ",Y);\

for(L=9; L>=-9; L-=1){\

printf("%f ",Temp[L,Y]/(n[L,Y]*1.0));\

}\

printf("\n");\

}\

}' ${H}/TAvg_1x1.txt > ${H}/TAvg_byLat_Globe.txt

Two surprises: there was no data in the range -45 to -84.99 latitude, and none above 80 latitude. The entire Lat=80 band is data from +75 to +79.99. At last, a warming trend has been found. Perhaps this graph is easier to read. Latitudes with no data have been eliminated. The legend has been rearranged with equatorial latitudes at the top, near their temperature plots.

The Arctic trend will be considered in depth here. https://justdata.wordpress.com/arctic-trends/

UHI effects will be considered as well. This one is too good to hide, greater NYC area versus 40N latitude stations and 35N to 45N stations.

## Gather up all the sectors in the 40.0 to 40.99 Latitude range

## Odd side-effect of my gridding method,

## NY NEW YORK CNTRL PRK lat=40.7800 long=-73.9700

## ends up in the lat=40 long=-74 sector.

awk 'BEGIN{print "Year 40N 35-45N NYC"}\

(($1==40) && ($2!=(-74)))){Avg[$3]+=$4; N[$3]++}\

(($1>=35)&&($1<=45)){Zavg[$3]+=$4; Zn[$3]++}\

(($1==40)&&($2==(-74))){NYt[$3]+=$4; NYn[$3]++}\

END{\

for(Y in Avg){print Y,Avg[Y]/N[Y],Zavg[Y]/Zn[Y],NYt[Y]/NYn[Y]}\

}' TAvg_1x1.txt | sort -n > 40N_latitude3.txt